Exporting a Lottie Animation to Video Using Media3

Summary

Exporting a Lottie animation to MP4, with audio, is a valuable feature for creative and media-heavy apps. From story editors to motion-graphics tools, turning Lottie scenes into shareable video clips lets users post their work on any platform. It’s a common pattern in successful template-driven apps such as Unfold, InStories, and Storybeat, where users start from animated templates and customize them with photos, text, or video.

This post walks you through a clean and performant pipeline that renders Lottie to video using the Media3 Transformer API and OpenGL — and adds audio support along the way.

What We’ll Build

We’ll implement a function:

    suspend fun recordLottieToVideo(
        context: Context,
        lottieFrameFactory: LottieFrameFactory,
        audioUri: String,
        outputFilePath: String,
        onProgress: (Float) -> Unit = {},
        onSuccess: (fileSize: Long) -> Unit = {},
        onError: (Throwable) -> Unit = {},
        durationMs: Long,
        frameRate: Int = 30,
        width: Int = 1080,
        height: Int = 1920
    )

This function:

- Renders Lottie frames to GPU textures

- Encodes them to video using Media3

- Adds an audio track via raw PCM decoding

- Outputs a complete .mp4 file

The Problem: Bridging Two Worlds

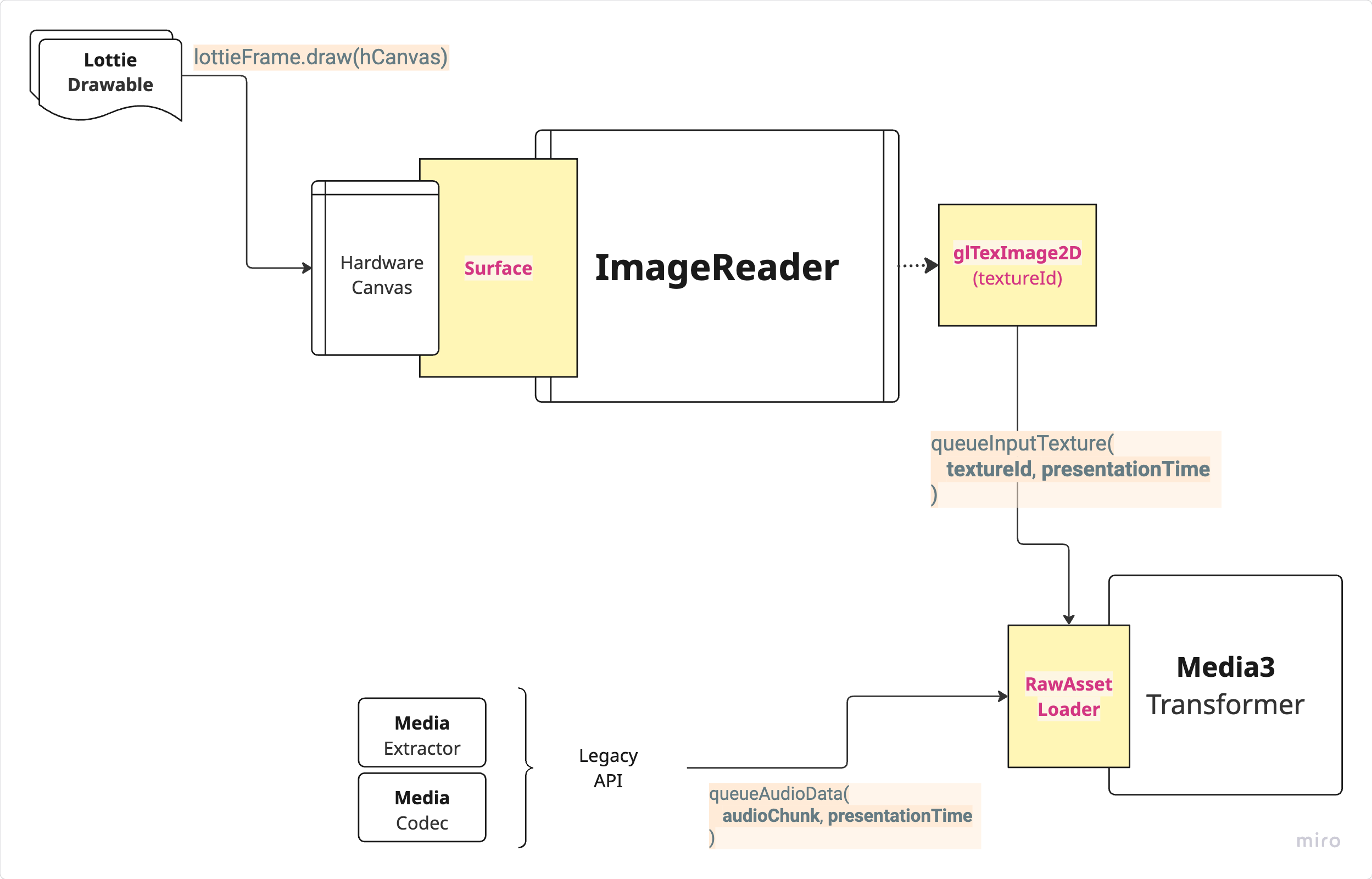

The solution to exporting Lottie animations with audio using Media3 comes down to connecting two seemingly unrelated pieces:

- On one side, we have LottieDrawable, a regular Android Drawable generated by Lottie that can draw animations onto any Canvas, including hardware-accelerated ones.

- On the other side, we have Media3’s Transformer, which can be configured with a RawAssetLoader to receive custom video frames (as OpenGL texture IDs) and raw audio chunks.

But how do you go from a LottieDrawable — something that draws on a Canvas — to an OpenGL texture ID that Media3 can encode?

The missing link is ImageReader. It provides a Surface that:

- Can be drawn to using lockHardwareCanvas() (GPU-accelerated)

- Provides Image frames that can be uploaded to the GPU as textures

This makes it the perfect bridge between the Canvas-based Lottie drawing world and the texture-based Media3 encoding pipeline.

Control Everything with RawAssetLoader

The RawAssetLoader gives you full manual control over how video and audio data is fed into the Media3 encoder:

    .setAssetLoaderFactory(
        RawAssetLoaderFactory(
            audioFormat = audioFormat,
            videoFormat = videoFormat,
            onRawAssetLoaderCreated = { loader ->
                rawAssetLoaderDeferred.complete(loader)
            }
        )
    )

From here on, you’re responsible for queuing:

- OpenGL texture IDs with timestamps

- Raw PCM audio chunks with timestamps
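To make the timestamp bookkeeping for the video side concrete, here is a minimal sketch of how the frame count and per-frame timestamps could be derived from the durationMs and frameRate parameters. The helper names totalFrames and framePresentationTimeUs are illustrative assumptions; they are not part of Media3 or of the original project.

```kotlin
/**
 * Number of frames needed to cover [durationMs] at [frameRate], rounded up.
 * Hypothetical helper, not a Media3 API.
 */
fun totalFrames(durationMs: Long, frameRate: Int): Int {
    require(durationMs >= 0 && frameRate > 0) { "invalid duration or frame rate" }
    // Integer ceiling of durationMs * frameRate / 1000.
    return ((durationMs * frameRate + 999) / 1000).toInt()
}

/**
 * Presentation time in microseconds of the frame at [frameIndex].
 * Computed from the index (not accumulated) so rounding errors do not drift.
 */
fun framePresentationTimeUs(frameIndex: Int, frameRate: Int): Long {
    require(frameIndex >= 0 && frameRate > 0) { "invalid frame index or frame rate" }
    return frameIndex * 1_000_000L / frameRate
}
```

With these helpers, a two-second export at 30 fps yields 60 frames, and the value for each frame index is what would be passed as presentationTimeUs when queuing its texture.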

Render Lottie to a Hardware Canvas

Each frame of the Lottie animation is generated as a LottieDrawable. The most performant way to get that drawable into a video frame is to draw it into a hardware-accelerated canvas — not into a regular software Bitmap.

    val hCanvas = imageReader.surface.lockHardwareCanvas()
    try {
        lottieFrame.setBounds(0, 0, videoWidth, videoHeight)
        lottieFrame.draw(hCanvas)
    } finally {
        imageReader.surface.unlockCanvasAndPost(hCanvas)
    }

This approach is not just efficient; it’s the key to real-time performance. By calling lockHardwareCanvas() on the ImageReader’s Surface, you automatically get a hardware-accelerated Canvas whose output is written to GPU-accessible memory.

✅ Does Lottie use the GPU here?

Yes! Internally, LottieDrawable.draw(Canvas) chooses between a software (renderAndDrawAsBitmap) and hardware (drawDirectlyToCanvas) rendering path.

If the Canvas is hardware-accelerated — as it is when created from lockHardwareCanvas() — Lottie will automatically use GPU rendering via drawDirectlyToCanvas().

Upload Frame as Texture via ImageReader

The unsung hero here is ImageReader. It provides the surface we draw to, and then lets us acquire the drawn image for use with OpenGL:

    val imageReader = ImageReader.newInstance(width, height, PixelFormat.RGBA_8888, maxImages)

Once a frame is drawn, we acquire the image, upload it to an OpenGL texture, and enqueue the returned texture ID into the RawAssetLoader:

    val image = awaitForLastImage.await()
    val textureId = uploadImageToGLTexture(image)
    rawAssetLoader.queueInputTexture(textureId, presentationTimeUs)

This texture now represents a video frame and will be encoded by Media3 Transformer.
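The body of uploadImageToGLTexture is not shown above. One detail such a function typically has to handle is that the row stride reported by an Image plane can be larger than width * 4 bytes, so the pixel rows may need to be repacked before a plain texture upload. The sketch below shows only that repacking step in plain Kotlin; packRgbaRows is a hypothetical helper, and it assumes a single RGBA_8888 plane with a pixel stride of 4.

```kotlin
import java.nio.ByteBuffer

/**
 * Copies an RGBA plane whose rows may be padded (rowStride > width * 4)
 * into a tightly packed buffer. Hypothetical helper, assuming 4 bytes per pixel.
 */
fun packRgbaRows(src: ByteBuffer, width: Int, height: Int, rowStride: Int): ByteBuffer {
    val rowBytes = width * 4
    require(rowStride >= rowBytes) { "rowStride must cover a full row" }
    val packed = ByteBuffer.allocateDirect(rowBytes * height)
    val row = ByteArray(rowBytes)
    // Work on a duplicate so the caller's position and limit stay untouched.
    val view = src.duplicate()
    for (y in 0 until height) {
        view.position(y * rowStride)   // jump to the start of row y, skipping padding
        view.get(row, 0, rowBytes)     // read only the visible pixels
        packed.put(row)
    }
    packed.flip()                      // make the packed data readable from index 0
    return packed
}
```

The returned buffer holds exactly width * height * 4 bytes and could then be handed to the texture upload call. When the row stride already equals width * 4, the copy is redundant and the plane buffer can be used directly.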

Decode and Enqueue Audio

In parallel, we decode audio from a URI into raw PCM chunks using a custom AudioDecoder. Each chunk is queued manually into the asset loader:

    val (audioChunk, presentationTimeUs) = awaitForAudioChunk.await()
    rawAssetLoader.queueAudioData(audioChunk, presentationTimeUs, isLast)

The audio chunks can be read from a local audio file with the MediaExtractor and MediaCodec APIs: a pipeline of small buffers is extracted, decoded, and then queued into the RawAssetLoader. Synchronization between video frames and audio chunks is manual but simple, since you’re in full control.
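As an illustration of the manual audio timing, the sketch below derives each chunk’s presentation time from the amount of PCM already queued. PcmClock is a hypothetical helper, and it assumes 16-bit PCM (2 bytes per sample per channel); the sample rate and channel count would come from the decoded audio format.

```kotlin
/**
 * Tracks the presentation time of consecutive 16-bit PCM chunks.
 * Hypothetical helper, not part of Media3.
 */
class PcmClock(private val sampleRate: Int, channelCount: Int) {
    // One audio frame = one sample for every channel, 2 bytes each (16-bit PCM assumed).
    private val bytesPerFrame = 2 * channelCount
    private var framesQueued = 0L

    /** Returns the timestamp (in microseconds) for the next chunk, then advances the clock. */
    fun nextPresentationTimeUs(chunkSizeBytes: Int): Long {
        require(chunkSizeBytes % bytesPerFrame == 0) { "chunk must contain whole audio frames" }
        // Derive the time from the total frame count so rounding does not accumulate.
        val timeUs = framesQueued * 1_000_000L / sampleRate
        framesQueued += chunkSizeBytes / bytesPerFrame
        return timeUs
    }
}
```

For stereo audio at 44.1 kHz, a 4096-byte chunk holds 1024 audio frames, so consecutive chunks are spaced roughly 23.2 ms apart. Because both the audio and video timestamps start at zero, the two tracks line up without extra synchronization logic.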

Bringing It All Together

    launch {
        repeat(totalFrames) { frameIndex ->
            // 1. Draw Lottie frame into hardware canvas
            // 2. Acquire image from ImageReader
            // 3. Upload image to GL texture
            // 4. Enqueue texture into Media3
        }
        rawAssetLoader.signalEndOfVideoInput()
    }

    launch {
        while (!endOfStream) {
            // 1. Decode next PCM chunk
            // 2. Enqueue it to Media3
        }
        rawAssetLoader.signalEndOfAudioInput()
    }
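Step 1 of the video loop needs to know which point of the animation to draw for a given frame index. A minimal sketch of that mapping, assuming the animation is stretched over the whole export duration, could look like this. The function name lottieProgressForFrame is hypothetical and not taken from the original project.

```kotlin
/**
 * Animation progress in [0, 1] for the output frame at [frameIndex].
 * Hypothetical helper: assumes the animation spans exactly [durationMs].
 */
fun lottieProgressForFrame(frameIndex: Int, frameRate: Int, durationMs: Long): Float {
    require(frameIndex >= 0 && frameRate > 0 && durationMs > 0) { "invalid arguments" }
    // Time of this frame in milliseconds, computed in floating point to avoid truncation.
    val timeMs = frameIndex * 1000.0 / frameRate
    // Clamp so that frames at or past the end show the final state of the animation.
    return (timeMs / durationMs).coerceIn(0.0, 1.0).toFloat()
}
```

If the frame factory wraps a LottieDrawable, a value like this could be applied through its setProgress method before drawing the frame into the hardware canvas.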

The full solution can be found in the GitHub repository: LottieRecorder

In the example code, I have used CompletableDeferred instances as one-shot signals that suspend the coroutine until the corresponding result is available, in a type-safe way. This removes some callbacks and makes the code easier to wrap inside a use case that returns a coroutine Flow.

Why This Approach Works

- RawAssetLoader gives full audio+video control

- ImageReader is optimized for canvas-to-texture use

- lockHardwareCanvas() ensures GPU memory drawing

- LottieDrawable already handles GPU rendering internally when used on a hardware canvas

- The solution uses as little custom code as possible, making it easier to maintain across multiple device models

Future Improvements

- Use a SurfaceTexture bound to an OES external texture, draw to its Surface with lockHardwareCanvas(), then sample that external texture directly in GL for near zero-copy (no CPU readbacks), but expect trickier setup/synchronization and test broadly.

- Investigate using Skottie (Skia GPU Lottie renderer) for even faster native rendering